Ongoing projects

Privacy and Security of Technology Ecosystems at Higher Education Institutes

Higher education institutes (HEIs) are quickly becoming complex ecosystems of computing technologies that handle diverse data about teaching, research, lifestyles and mobility on campus, health, finances, and immigration status. In most cases, these technologies and services are provided by third-party for-profit vendors, and their integration creates ecosystems that often lack transparency and auditability, as identified in our recent paper.

This opaque collection of systems and the data flows among them, which HEI personnel cannot monitor, creates numerous opportunities for privacy invasion and threatens the safety and well-being of the data subjects. At the same time, the massive amounts of data these technologies collect are making HEIs lucrative targets for cyber attackers.

Our research is shedding light on these emerging problems through qualitative explorations, as well as developing tools and frameworks for identifying and quantifying privacy and security issues, and innovating protective mechanisms.

Methods: interviews and surveys, system development on mobile and web platforms, network measurement and traffic analysis, statistical analysis.

Deception-based Proactive Defense to Prevent Cyberattacks

Coordinated cyber attackers are increasingly employing sophisticated techniques and tactics to compromise critical infrastructure. Current preventive mechanisms are mostly reactive and generate too many false-positive alarms. Recent research has recognized the critical need for proactive defense mechanisms that can prevent an attack from succeeding. Deception-based defense is among the most promising of these approaches: it misleads attackers into wasting their time and resources while learning about their attack strategy to strengthen the defense system. We are building the next generation of deception mechanisms that dynamically adapt to the attacker’s strategy. By analyzing attacker personality traits, TODDE predicts attackers’ next moves and deploys customized deception tactics, such as AI-powered honeypots that respond adaptively to attacker behavior, making the deception more realistic and effective. This dynamic framework combines psychological insights, advanced AI, and machine learning to enhance cybersecurity defenses through targeted, adaptive deception.

Methods: causal machine learning, system development for real attack-based experiments, and cognitive psychology.

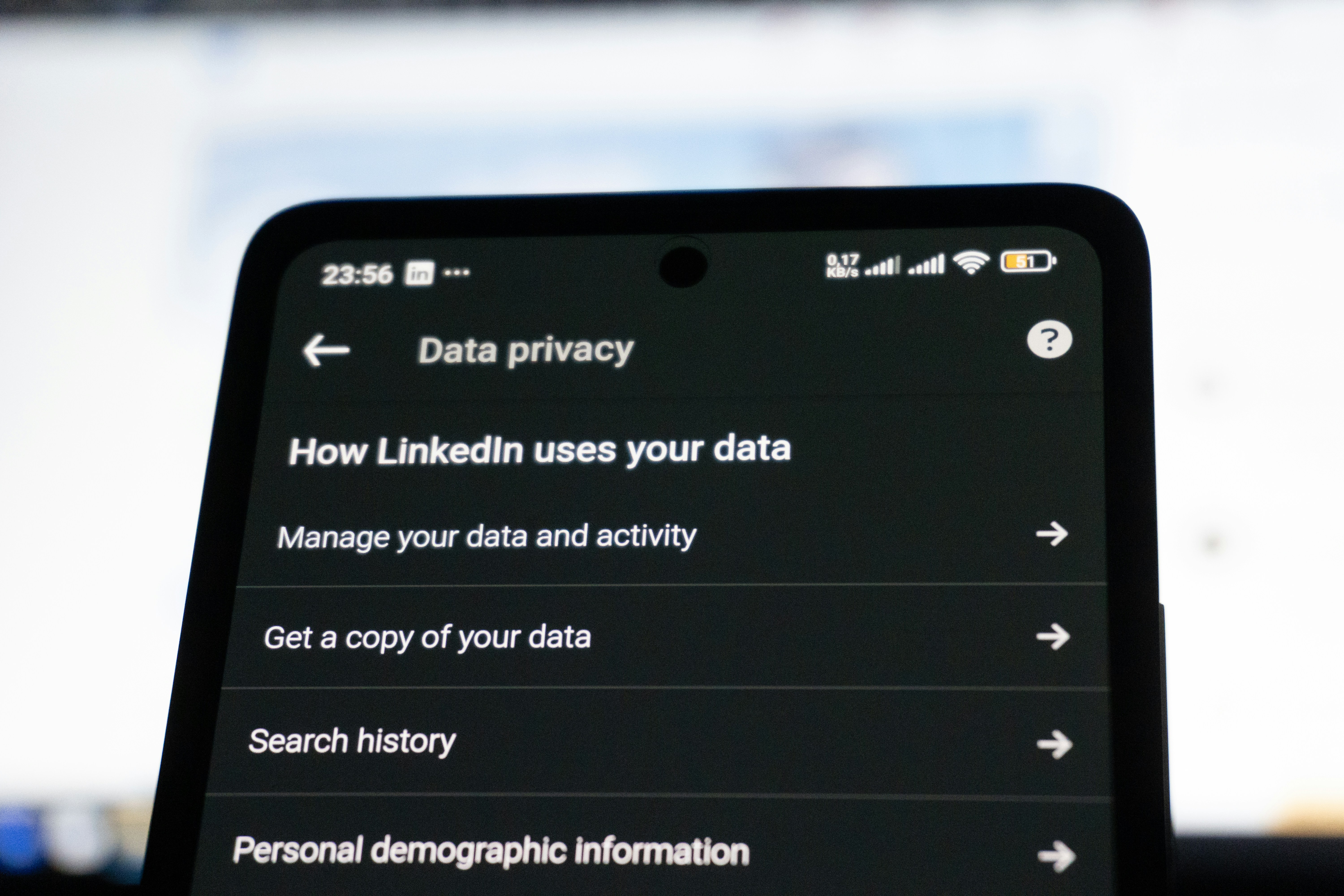

Privacy Regulations and Their Impact Assessment

Regulations attempt to protect consumers from privacy invasions by platforms. But how well do they work in practice? This seemingly simple question is difficult to answer in the real world, as platform behaviors change for many reasons, not only regulations. We are taking a quasi-experimental perspective to investigate this question in the wild, where we analyze platform behaviors in the presence and absence of regulatory restrictions to estimate their impacts, if any.

Methods: measurement framework development for mobile and web platforms, network measurement and traffic analysis, statistical analysis.
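
To illustrate the quasi-experimental reasoning described for this project, the sketch below uses a difference-in-differences comparison, which is one common design of this kind and is assumed here only for illustration; the project description does not state which estimator is used. The function name, the outcome (third-party domains contacted per app session), and all numbers are hypothetical. The estimate is meaningful only if the regulated and unregulated groups would have followed similar trends without the regulation.

```python
from statistics import mean


def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Difference-in-differences estimate of a regulation's effect.

    Each argument is a list of measurements of one outcome. The change
    in the unregulated (control) group is subtracted from the change in
    the regulated (treated) group, so that shifts affecting both groups
    are not attributed to the regulation.
    """
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Made-up illustrative values, not real measurements:
# third-party domains contacted per app session.
regulated_before = [12, 14, 13, 15]
regulated_after = [8, 9, 10, 9]
unregulated_before = [11, 13, 12, 12]
unregulated_after = [10, 12, 11, 11]

effect = diff_in_diff(
    regulated_before, regulated_after, unregulated_before, unregulated_after
)
print(f"Estimated effect: {effect:+.2f} domains per session")
```

A negative estimate would suggest that the outcome dropped more where the regulation applies than where it does not. A real analysis would also need uncertainty estimates and checks of the similar-trends assumption.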

Interdependent Privacy

Digital technologies collect massive amounts of data about people who are not their users. Examples include wearable, sensor-rich devices such as augmented or virtual reality cameras, headsets, and glasses that record people around them; voice assistants; and applications running on mobile devices that collect, e.g., phone numbers of other people. All this data can be used in ways that threaten the privacy and safety of the data subjects, including tracking their activities and location, stalking and harassment, and fraud and identity theft. This project aims to innovate socio-technical systems and methods for using technology responsibly and minimizing harm to other people.

Methods: modeling human decision-making, cognitive and behavioral science, user studies, and mobile app development.

Personalized Misinformation

The digital traces we leave behind while browsing the internet, and the intimate physiological and psychological details that wearable devices (e.g., fitness trackers) collect, provide many clues about our interests and lifestyles, as well as our demographic, physical, and cognitive characteristics. Such clues can be used, or abused, by anyone with access to that data, including entities that buy it from data collectors in the marketplace. One prominent danger of such data abuse is personalized misinformation, which might allow an adversary to propagate misinformation more effectively and efficiently than by broadcasting it to everyone.

We are studying whether this is possible, i.e., whether people are more likely to fall for misinformation when it is personalized, and whether such targeted misinformation propagation is already happening in the wild.

Methods: user studies, internet measurement, traffic analysis.